Counterfactual Explanations for Human Gait Classification: A Novel Explainability Approach for Human Gait Analysis

Aim and Research Question(s)

Building on the work of Slijepcevic et al. [1], this thesis proposes alternative explainability strategies based on counterfactual explanations. It presents an explanation approach that is novel for this domain and evaluates its clinical validity. Using 3D gait data and two clinically relevant use cases (UC1: Valgus/Varus; UC2: six gait patterns occurring in children with cerebral palsy), the thesis provides a robust baseline for these classification tasks and a strong foundation for further research on counterfactuals.

Background

In the treatment of children with cerebral palsy (CP), instrumented gait analysis is routinely used to support clinical decision-making and to assess treatment outcomes. Gait classification systems make it possible to group the gait patterns of children with CP into clinically meaningful categories. Because gait categorization relies on a clinician’s subjective opinion and personal experience, classifications may vary between gait analysts. An objective strategy for classifying and explaining gait in CP patients is therefore necessary.

Methods

Two counterfactual explanation methods are applied. The first is Ib-CFE [2], an instance-based method that uses existing counterfactual instances and modifies discriminative portions of the time series. The second is CoMTE [3], a counterfactual explainability approach for multivariate time series that takes a multivariate time series as input and returns the relevant variables and their counterfactuals.
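
The instance-based idea behind Ib-CFE can be illustrated with a minimal sketch. It is not the implementation used in the thesis or in [2]. It assumes a univariate series, Euclidean distance, a per-time-step importance vector supplied by the caller (for example a class activation map), and a toy threshold classifier. All function names and data below are hypothetical.

```python
from typing import Callable, List, Sequence

Series = List[float]


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two equally long series."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5


def nearest_unlike_neighbor(query: Series, query_label: int,
                            train_X: List[Series], train_y: List[int]) -> Series:
    """Return the training series closest to `query` whose label differs."""
    candidates = [x for x, y in zip(train_X, train_y) if y != query_label]
    if not candidates:
        raise ValueError("no training series with a different label")
    return min(candidates, key=lambda x: euclidean(query, x))


def best_window(importance: Sequence[float], length: int) -> int:
    """Start index of the contiguous window with the highest summed importance."""
    starts = range(len(importance) - length + 1)
    return max(starts, key=lambda s: sum(importance[s:s + length]))


def counterfactual(query: Series, predict: Callable[[Series], int],
                   train_X: List[Series], train_y: List[int],
                   importance: Sequence[float]) -> Series:
    """Copy a growing, most-important window from the nearest unlike
    neighbor into the query until the predicted class changes."""
    query_label = predict(query)
    nun = nearest_unlike_neighbor(query, query_label, train_X, train_y)
    for length in range(1, len(query) + 1):
        start = best_window(importance, length)
        candidate = list(query)
        candidate[start:start + length] = nun[start:start + length]
        if predict(candidate) != query_label:
            return candidate
    # Fallback: the classifier assigns the neighbor the query's class,
    # so no window flips the prediction; return the neighbor itself.
    return list(nun)


def toy_predict(series: Series) -> int:
    """Hypothetical classifier: class 1 if the mean exceeds 0.3, else class 0."""
    return 1 if sum(series) / len(series) > 0.3 else 0


query = [0.0, 0.1, 0.0, 0.1, 0.0, 0.1]
train_X = [[1.0] * 6, [0.0] * 6, [0.2, 0.9, 1.0, 0.9, 0.2, 0.1]]
train_y = [1, 0, 1]
importance = [0.0, 0.5, 1.0, 0.5, 0.0, 0.0]

cf = counterfactual(query, toy_predict, train_X, train_y, importance)
print(cf, toy_predict(cf))
```

Growing the window from the most important region keeps the counterfactual close to the original series, so only the portion needed to change the prediction is altered.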

Results and Discussion

The proposed models achieved results comparable to state-of-the-art models in the time series classification (TSC) field and learned certain properties of the data in both use cases. According to the evaluations and experiments, Ib-CFE provides more clinically valid explanations than CoMTE.

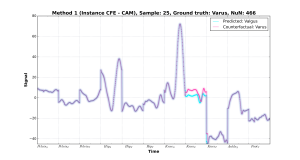

Sample 25 was misclassified as Valgus. The counterfactual generated by Ib-CFE (CAM) was evaluated as very plausible.

Conclusion

The experiments indicate that the generated counterfactuals can, as a whole, be considered plausible: more results were rated plausible or rather plausible than implausible. Because diverse nearest unlike neighbors and both models are used, it can also be assumed that the methods generate diverse counterfactuals and thus offer the domain expert more diverse alternatives in their explanations. Nevertheless, these indications need to be treated carefully. The samples provided cannot represent the whole domain, so it cannot be concluded that the methods generate meaningful counterfactuals for every sample and use case. Further research requires a bigger dataset with more samples that are interesting for examination, a more extensive qualitative evaluation, and the generation of plausible counterfactuals.

References

[1] Slijepcevic et al., “Explaining machine learning models for clinical gait analysis,” ACM Transactions on Computing for Healthcare (HEALTH), vol. 3, no. 2, pp. 1–27, 2021.

[2] Delaney et al., “Instance-based counterfactual explanations for time series classification,” arXiv preprint arXiv:2009.13211, 2020.

[3] E. Ates et al., “Counterfactual explanations for multivariate time series,” in 2021 International Conference on Applied Artificial Intelligence (ICAPAI), IEEE, 2021, pp. 1–8.